What Is SRT Protocol and Why Is It So Important?

"What is the SRT open-source Internet transfer protocol? What does it mean for the video streaming industry? FMUSER will introduce all the information you need to know about the SRT protocol, including its meaning, its background, and the influence and change it may bring to future video streaming solutions, the video industry, and the development of network video transmission. ---- FMUSER"

What is SRT Protocol?

How Does SRT Protocol Work?

The Development History of SRT protocol

What Can SRT Protocol Bring Us?

Why Is The SRT Protocol So Important?

What Are The Benefits Of Using SRT Protocol?

SRT Protocol Supported Streaming Solutions From FMUSER

Comparing SRT Protocol With Common Transmission Formats

HTTP Live Streaming (HLS)

How Does HLS Work?

MPEG-DASH (Dynamic Adaptive Streaming Over HTTP)

How MPEG-DASH Works And Applications

Which Streaming Protocol Is Right For You?

True Things about the SRT Protocol

● Definition: SRT stands for Secure Reliable Transport. The SRT protocol is a royalty-free, open-source video transmission protocol that delivers high-quality, low-latency, secure, real-time video. It enables low-latency, high-performance streaming over noisy or unpredictable networks such as the public Internet. SRT protocol is a very popular open-source low-latency video transmission protocol today. Using its reliable transmission technology, it can deliver safe and reliable high-definition video transmission and distribution over the ordinary Internet and between multiple locations.

Where Does SRT Protocol Come From?

● SRT Alliance is an organization established by Haivision and Wowza to manage and support open source applications of the SRT protocol.

This organization is committed to promoting the interoperability of video streaming solutions and promoting the collaboration of pioneers in the video industry to achieve low-latency network video transmission.

#Working Principle of SRT protocol

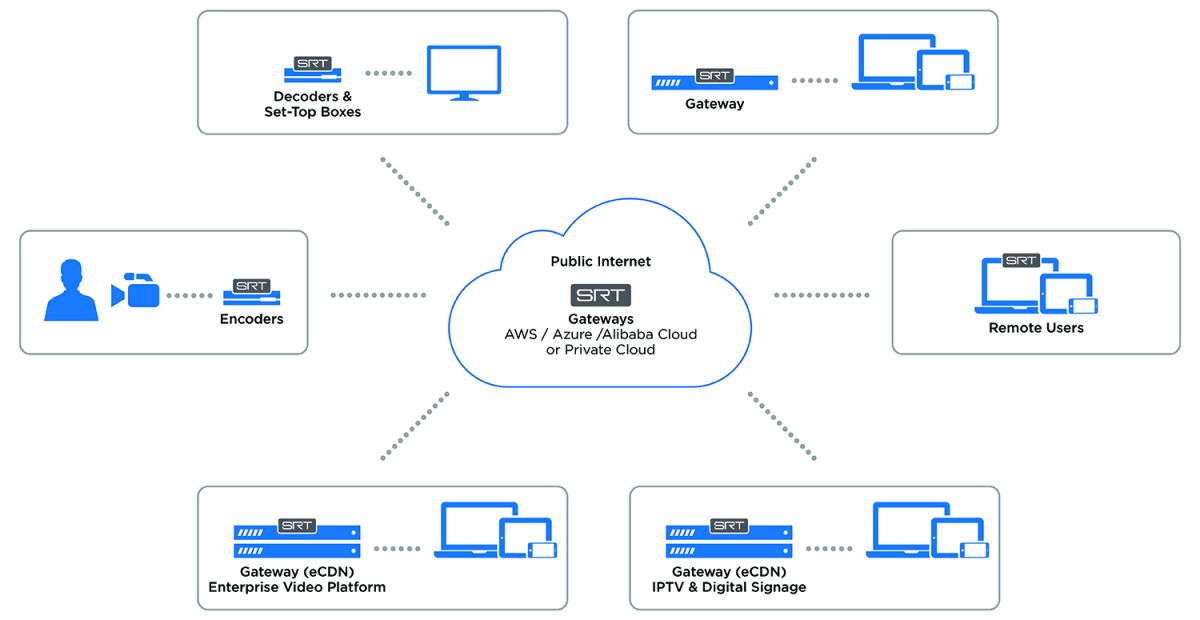

Any firewall between the SRT protocol source device and the target device must be traversed. SRT protocol has three connection modes to achieve this:

Rendezvous / Caller / Listener
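As a rough illustration of how the three modes differ in practice, the sketch below builds `srt://` URIs that carry a `mode` query parameter, the form accepted by tools such as `srt-live-transmit`. The helper name `build_srt_uri`, the host names, and the port are hypothetical and chosen only for this example.

```python
from urllib.parse import urlencode

VALID_MODES = {"caller", "listener", "rendezvous"}


def build_srt_uri(mode: str, port: int, host: str = "") -> str:
    """Build an srt:// URI for one endpoint (hypothetical helper)."""
    if mode not in VALID_MODES:
        raise ValueError(f"unknown SRT mode: {mode!r}")
    # A listener waits for incoming connections, so it needs no remote host.
    # Caller and rendezvous endpoints must name the peer they connect to.
    if mode != "listener" and not host:
        raise ValueError(f"{mode} mode needs a remote host")
    return f"srt://{host}:{port}?{urlencode({'mode': mode})}"


if __name__ == "__main__":
    # Example host names are placeholders, not real endpoints.
    print(build_srt_uri("listener", 9000))
    print(build_srt_uri("caller", 9000, "encoder.example.com"))
    print(build_srt_uri("rendezvous", 9000, "peer.example.com"))
```

In a typical setup the device behind the stricter firewall acts as the caller, so only an outbound connection is needed, while rendezvous has both sides connect to each other at the same time.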

3. The Development History of SRT protocol

Currently, 50 SRT-enabled products are already on the market, including IP cameras, encoders, decoders, video gateways, OTT platforms, and CDNs. The SRT protocol is used by thousands of organizations in many applications and markets around the world.

FMUSER is one of the strong supporters of SRT protocol. We have high requirements for interoperability and standards. FMUSER has set out to implement support for the SRT protocol in its current and upcoming encoding and decoding solutions.

4. What Can SRT Protocol Bring Us?

We thought it was about time to revisit the hot topic of Secure Reliable Transport protocol (SRT protocol) this week. A few small SRT protocol announcements have surfaced since the open-source protocol stole the spotlight in Vegas for the second year running.

A little over a year has passed since SRT protocol achieved one of its most significant deployments to date, with ESPN rolling out SRT-equipped devices to 14 athletic conferences to produce over 2,200 events via low-cost internet connections, replacing traditional satellite uplink services and resulting in cost savings of between $8 million and $9 million. If ESPN can achieve cost savings on this scale for relatively low-key events, imagine the possibilities for major live events: cash which can ultimately be invested elsewhere in improving the viewer experience.

But with streaming industry pioneers like Netflix and YouTube delivering HTTP content over CDNs to millions of viewers without a helping hand from SRT protocol, what’s all the fuss about? A whitepaper from broadcast video vendor Haivision, a founding member of the SRT Alliance, essentially aims to debunk the myth that HTTP streaming technology using RTMP is the be-all and end-all for OTT video. In fact, delays as high as 30 seconds are not uncommon in HTTP streaming, caused primarily by a multitude of processing steps and various buffers along the signal path.

In addition, Haivision warns that Transmission Control Protocol (TCP), the standard used in delivering HTTP, can cause a sharp spike in delays as TCP requires that every last packet of a stream is delivered to the end-user in the exact original order. This ultimately means TCP perpetually attempts to send missing data as there is no capability to skip over bad bytes.

A more trivial downside is that SRT protocol already existed as an acronym in the video industry long before the low latency protocol came along, relating to an extension for subtitle computer files called SubRip, so an online search for information on the protocol could easily lead you astray to an entirely different technology stack.

Moving swiftly on now to how SRT has made a name for itself. The diagram below visualizes how an error is generated in the output signal of an uncorrected stream whenever a packet is lost (top), while Forward Error Correction (FEC) adds a constant amount of data to the stream to recreate lost packets, as shown in the middle. Then we have Automatic Repeat reQuest (ARQ) which retransmits lost packets upon request from the receiver, which prevents constant bandwidth consumption of FEC.
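The difference between the two recovery strategies can be sketched in a few lines. This is a deliberately simplified illustration, not SRT's actual wire format: the FEC part uses a single XOR parity packet per group (which can rebuild at most one lost packet), and the ARQ part only computes which sequence numbers a receiver would ask for again. All function names and packet contents are assumptions made for the example.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def fec_parity(packets: list[bytes]) -> bytes:
    """FEC: one parity packet is sent with every group, lost packets or not."""
    return reduce(xor_bytes, packets)


def fec_recover(received: dict[int, bytes], parity: bytes, group_size: int) -> dict[int, bytes]:
    """Rebuild a single missing packet by XOR-ing the parity with the survivors."""
    missing = [i for i in range(group_size) if i not in received]
    if len(missing) == 1:
        rebuilt = reduce(xor_bytes, received.values(), parity)
        received = {**received, missing[0]: rebuilt}
    return received


def arq_requests(received_seqs: set[int], highest_seq: int) -> list[int]:
    """ARQ: the receiver asks only for the sequence numbers it did not get."""
    return [s for s in range(highest_seq + 1) if s not in received_seqs]


if __name__ == "__main__":
    group = [b"AAAA", b"BBBB", b"CCCC", b"DDDD"]
    parity = fec_parity(group)
    survivors = {0: group[0], 1: group[1], 3: group[3]}  # packet 2 was lost
    print(fec_recover(survivors, parity, len(group))[2])  # rebuilt locally
    print(arq_requests({0, 1, 3}, highest_seq=3))          # retransmit request
```

The FEC path costs one extra packet per group even on a clean link, while the ARQ path costs nothing until a loss actually occurs, at the price of a round trip for each retransmission.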

A third and final catch of HTTP relates to the manner in which TCP drops packet transmission rates when congestion occurs. “While this behavior is good for reducing overall congestion in a network, it is not appropriate for a video signal, which cannot survive a drop in speed below its nominal bit rate,” it warns.

“The benefits are significant for both technology suppliers and users, greatly simplifying implementation and reducing costs, thereby improving product availability and helping to keep prices low. And, since every implementer uses the same code base, interoperability is simplified,” is probably a better conclusion for the whitepaper than the one it actually chose.

5. Why Is The SRT Protocol So Important?

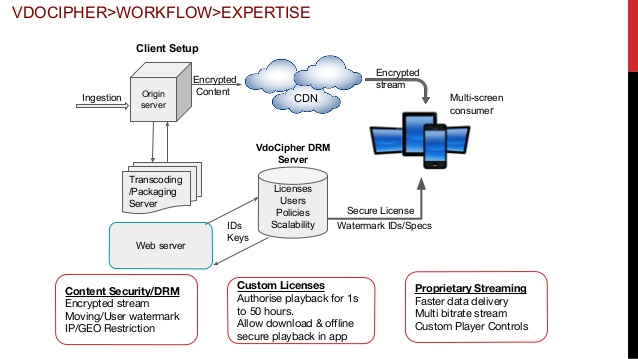

The application of the SRT protocol in the audiovisual and IT sectors has received strong feedback. The excitement comes mainly from IT thought leaders among enterprise and government end users, online video platforms, content delivery networks, enterprise video content management systems, and the hardware, software, and service companies that provide the infrastructure on which Internet streaming is based.

In businesses, governments, schools, and defense, the consumption of high-performance video is rising sharply. Many protocols have solved the problem of delivering compatible streaming video to large numbers of viewers who consume content on different devices.

However, one of the best ways to take advantage of the local assets of various organizations and the large investments made by service providers in the cloud is to provide streaming distribution tools that deliver video with very low latency and very high reliability. SRT protocol uses some of the best aspects of User Datagram Protocol (UDP), such as low latency, but adds error checking to match the reliability of the Transmission Control Protocol/Internet Protocol (TCP/IP). Although TCP/IP can handle all data profiles and is well suited to that job, SRT protocol specifically addresses high-performance video.

Note: SRT protocol can replace the aging RTMP protocol. It solves security issues and focuses on high-performance video, even over public Internet infrastructure.

6. What Are The Benefits Of Using SRT Protocol?

Three characteristics: SECURITY, RELIABILITY, and LOW LATENCY.

| Terms | Features |
|---|---|
| In terms of SECURITY | SRT protocol supports AES encryption to ensure end-to-end video transmission security. |
| In terms of RELIABILITY | SRT protocol uses Forward Error Correction (FEC) to ensure the stability of the transmission. |
| In terms of LOW LATENCY | SRT protocol is built on top of the UDT protocol and solves UDT's problem of high transmission delay. UDT itself is based on the UDP network communication protocol. |

#Sheet 1 - What Are the Features of SRT Protocol

SRT protocol allows a direct connection between the signal source and the target. This is in sharp contrast to many existing video transmission systems, which require a centralized server to collect signals from remote locations and redirect them to one or more destinations. A central server-based architecture has a single point of failure, which can also become a bottleneck during periods of high traffic. Sending signals through a hub also increases the end-to-end signal transmission time and may double the bandwidth cost, because two links are needed: one from the source to the central hub and another from the hub to the destination. By using direct connections from source to destination, SRT protocol can reduce latency, eliminate central bottlenecks, and reduce network costs.

| MAIN FEATURES OF THE SRT PROTOCOL | |
|---|---|
| Functional | Raw quality video: SRT protocol is designed to protect against jitter, packet loss, and bandwidth fluctuations caused by congestion on noisy networks, for the best viewing experience. This is accomplished by advanced low-latency retransmission technology, which compensates for and manages packet loss. SRT protocol can withstand up to 10% packet loss without a visual impact on the stream. |
| Effective | Low latency: despite dealing with network challenges, video and audio are delivered with low latency, combining the reliability of TCP/IP delivery with the speed of UDP. |
| Secure | Secure end-to-end transmission: industry-standard AES 128/256-bit encryption ensures protection of content over the Internet, and SRT protocol provides simplified firewall traversal. Because SRT protocol ensures security and reliability, the public Internet can now be used for extended streaming media applications, such as streaming to cloud-based social media sites (for example, a multi-cloud platform that distributes one real-time video feed concurrently to several social media services such as Facebook Live, YouTube, Twitch, and Periscope), or streaming or remoting entire video wall content or a region of interest (ROI) of the video wall. |
| Advanced | Open source: SRT protocol is a royalty-free, next-generation, open-source protocol that provides cost-effective, interoperable, and future-oriented solutions. |
| Cost-effective | Interoperability: knowing that multi-vendor products will work together seamlessly, users can safely deploy SRT protocol across the entire video and audio stream workflow. |

#Sheet 2 - Why Do We Choose SRT Protocol?

7. SRT Protocol Supported Streaming Solutions From FMUSER

The FMUSER H.264/H.265 encoder/decoder and multi-HD encoder/decoder pair support many popular streaming protocols, including SRT protocol. With this compact, robust, low-power encoder/decoder pair, users can confidently transmit real-time streams up to 4K or Quad HD from multiple SDI cameras over a managed or unmanaged network.

| FMUSER IPTV Encoder / Decoder / Transcoder | | |
|---|---|---|
| FBE200 H.264/H.265 IPTV Hardware Encoder | FBE204 H.264/H.265 IPTV Hardware Encoder | FBE216 H.264/H.265 IPTV Hardware Encoder |
| 1 channel | 4 channels | 16 channels |

#Sheet 3 - FMUSER Audio and Video Broadcast Transmission Solutions

In field production applications, the FMUSER encoder family provides the lowest glass-to-glass delay on the market, safely delivering streams from remote events to production studios. The stream generated by the FMUSER encoder includes a program timestamp so that signals from synchronized cameras can be realigned when decoded by the FMUSER decoder. In addition, these feeds can be synchronized to ensure seamless integration into the studio environment.

Note: The FMUSER encoder/decoder has powerful streaming media service functions. In addition to general protocols such as RTSP and RTMP, it supports the ONVIF protocol used in security systems, SIP, NDI (customizable), SRT (customizable), GB/T28181 (customizable), and other streaming media protocols, which will help you gain a leading edge in the ultra-high-definition video IP-based business.

FMUSER builds its technology around high-quality products and continual innovation. R&D, production, sales, service, and other business functions are fully integrated in-house, so service is uninterrupted from beginning to end. User needs are always the driving force of innovation: FMUSER only makes products that meet users' actual needs and that users can trust. It also provides customers with high-quality product development and customization services.

8. Comparing SRT Protocol With Common Transmission Formats

For network video transmission, more efficient streaming protocols are needed. As companies and content delivery network (CDN) providers prepare for a future full of live streaming, this need has never been more urgent. The future of real-time streaming has arrived with SRT protocol, HLS, and MPEG-DASH. Let's take a look at what these real-time streaming protocols are, their benefits, and their applications.

There are currently two main approaches to live video broadcasting on the Internet.

● RTMP-based live broadcast.

● WebRTC-based live broadcast.

| RTMP-based live broadcast | WebRTC protocol |
|---|---|
| 1. This live broadcast method uses the RTMP protocol for upstream push and RTMP, HTTP+FLV, or HLS for downstream playback. 2. The live broadcast delay is generally greater than 3 seconds. | 1. This live broadcast method uses the UDP protocol for streaming media distribution. 2. The live broadcast delay is less than 1 second, and the number of simultaneous connections is generally less than 10. |
| Note: It is mainly used in applications that need low latency and large concurrency, such as live events, stock information synchronization, and large class education. | Note: It is mainly used in scenarios such as video calls and live shows with guests connecting by microphone. |

#Sheet 4 - Comparison of RTMP and WebRTC

HTTP Live Streaming (HLS) is an adaptive, HTTP-based streaming protocol that sends video and audio content over the network in small, TCP-based media segments that get reassembled at the streaming destination. The cost to deploy HLS is low because it uses existing TCP-based network technology, which is attractive for CDNs looking to replace old (and expensive) RTMP media servers. But because HLS uses TCP, Quality of Experience (QoE) is favored over low latency and lag times can be high (as in seconds instead of milliseconds).

HLS was originally developed by Apple Inc. as a protocol to stream media to Apple devices. Apple has since developed HLS (push), which is an open-standard streaming protocol on the contribution side that’s available to all devices. Currently, HLS supports video that is encoded using H.264 or HEVC codecs.

# HTTP Live Streaming (HLS)

An advantage of HLS is that it is designed to adapt to different network conditions. Different versions of the stream are sent at different resolutions and bitrates. Viewers can choose the quality of the stream they want. HLS also supports multiple audio tracks, which means your stream could have multiple language tracks that users can choose from. Other perks include support for closed captions, metadata, Digital Rights Management (DRM), and even embedded advertisements (in the not too distant future). The framework is all there.
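To make the adaptive part concrete, here is a minimal sketch of how a player might pick one of several renditions from a measured bandwidth figure. The rendition ladder, the 0.8 safety factor, and the function name are assumptions for illustration only; real HLS players use more elaborate heuristics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    height: int        # vertical resolution in pixels
    bitrate_kbps: int  # average bitrate of this version of the stream


# Hypothetical ladder; the values are illustrative, not a recommendation.
LADDER = [
    Rendition("low", 360, 800),
    Rendition("medium", 720, 2500),
    Rendition("high", 1080, 5000),
]


def pick_rendition(ladder: list[Rendition], bandwidth_kbps: float, safety: float = 0.8) -> Rendition:
    """Pick the highest-bitrate rendition that fits within a safety margin."""
    budget = bandwidth_kbps * safety
    fitting = [r for r in ladder if r.bitrate_kbps <= budget]
    if not fitting:
        # Nothing fits: fall back to the smallest stream rather than stalling.
        return min(ladder, key=lambda r: r.bitrate_kbps)
    return max(fitting, key=lambda r: r.bitrate_kbps)


if __name__ == "__main__":
    for measured in (500, 4000, 10000):
        print(measured, "kbps ->", pick_rendition(LADDER, measured).name)
```

The safety factor leaves headroom so that a brief dip in throughput does not immediately force a switch to a lower rendition.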

Note: Secure streaming over HTTPS is supported, as well as MD5 hashing and SHA hashing algorithms for user name and password authentication.

The approach is a lot like a file transfer. Media segments stream over HTTP port 80 (or port 443 for HTTPS), which is typically already open to network traffic. As such, the content can easily traverse firewalls with little to no IT involvement.

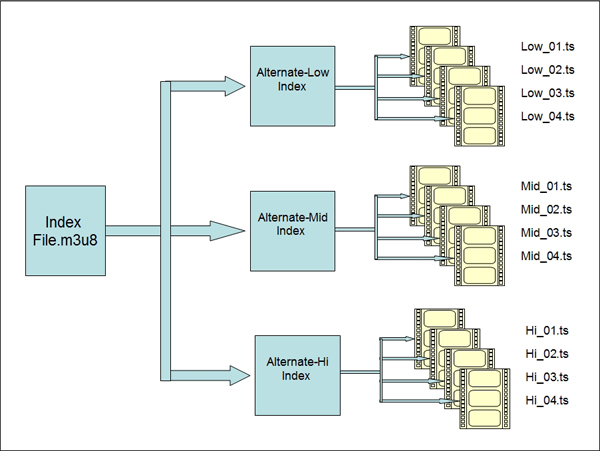

# Get to Know How HLS Works

HLS uses an MPEG2-TS transport stream container with a configurable media segment duration, as well as a configurable playlist size for reassembling the media segments at the ingestion server. Fragmented MP4 is supported.
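The relationship between segment duration and playlist size can be sketched as a small playlist generator. This is an illustrative sketch only: the segment file names and the function name are hypothetical, and it emits just the basic media playlist tags (`#EXTM3U`, `#EXT-X-VERSION`, `#EXT-X-TARGETDURATION`, `#EXT-X-MEDIA-SEQUENCE`, `#EXTINF`) for a live sliding window.

```python
import math


def build_media_playlist(segments: list[tuple[str, float]], first_sequence: int, playlist_size: int) -> str:
    """Render the newest `playlist_size` segments as a live HLS media playlist.

    `segments` is a list of (uri, duration_seconds) pairs, oldest first.
    """
    if playlist_size < 1 or not segments:
        raise ValueError("need at least one segment and a positive playlist size")
    window = segments[-playlist_size:]
    dropped = len(segments) - len(window)
    # The target duration must not be smaller than any segment in the list.
    target = math.ceil(max(duration for _, duration in window))
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target}",
        # Sequence number of the first segment still listed in the window.
        f"#EXT-X-MEDIA-SEQUENCE:{first_sequence + dropped}",
    ]
    for uri, duration in window:
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(uri)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical 4-second segments named seg0.ts ... seg4.ts.
    segs = [(f"seg{i}.ts", 4.0) for i in range(5)]
    print(build_media_playlist(segs, first_sequence=0, playlist_size=3))
```

With 4-second segments and a playlist size of 3, a player that starts at the beginning of the window is already about 12 seconds behind the encoder, which is why shorter segments and smaller playlists are the main levers for reducing HLS latency.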

Note: Because HLS uses TCP-based technology, detecting and recovering from network packet loss is intensive. That is one of the reasons for the increased latency. Although some control over the media segment size is available, the ability to reduce latency is limited, especially if the ingestion server requires a specific media segment size.

HLS is still the standard for streaming to mobile devices and tablets. You can also use HLS to stream to a CDN that does not support RTMP when low latency isn’t a requirement. It’s important to note that RTMP is already being deprecated by more and more CDNs. HLS is also well suited to securely stream corporate training and town halls over private Local Area Networks (LANs) when low latency isn’t a requirement and network conditions are poor (assuming the network supports HLS).

11. MPEG-DASH (Dynamic Adaptive Streaming Over HTTP)

MPEG-DASH is an open-standard, adaptive, HTTP-based streaming protocol that sends video and audio content over the network in small, TCP-based media segments that get reassembled at the streaming destination. The International Organization for Standardization (ISO) and the team at MPEG designed MPEG-DASH to be codec and resolution agnostic, which means MPEG-DASH can stream video (and audio) of any format (H.264, H.265, etc.) and supports resolutions up to 4K. Otherwise, MPEG-DASH functions much the same as HLS.

The cost to deploy MPEG-DASH is low because it uses existing TCP-based network technology, which is attractive for CDNs. But because packets are transported over TCP, Quality of Experience (QoE) is favored over low latency and lag times can be high.

MPEG-DASH is also designed to adapt to different network conditions. Different versions of the stream are sent at different resolutions and bitrates. Viewers can choose the quality of the stream they want. Multiple audio tracks are also supported, as well as enhanced features like closed captions, metadata, and Digital Rights Management (DRM). The infrastructure is there for future developments, like embedded advertisements

Note: Secure streaming over HTTPS is supported, as well as MD5 hashing and SHA hashing algorithms for user name and password authentication.

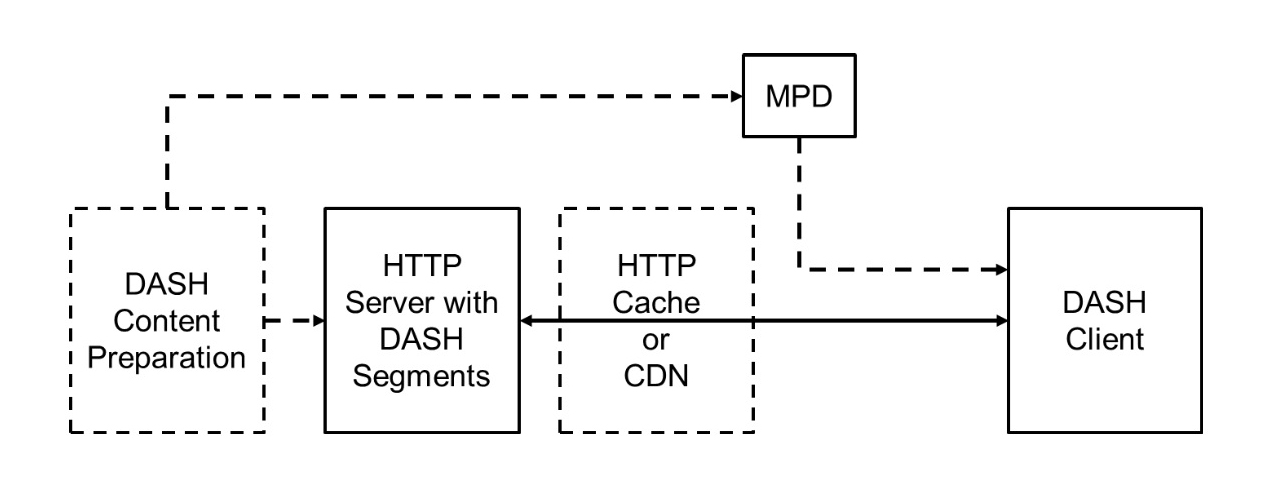

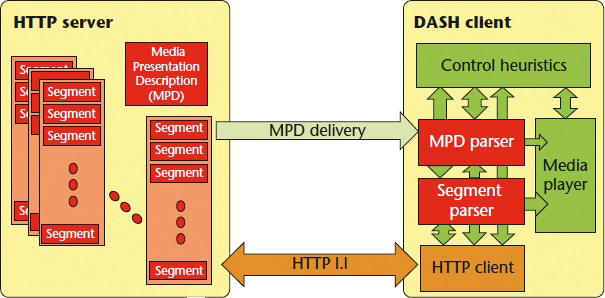

Working Principle:

MPEG-DASH works much the same way as HLS and sends short media segments over HTTP (port 80) or HTTPS (port 443) for easy firewall traversal. Segments are typically fragmented MP4 (MPEG2-TS is also supported), with a configurable media segment duration, and are described by a Media Presentation Description (MPD) manifest rather than an HLS playlist.

#Conceptual Architecture of MPEG DASH

#The MPEG-DASH Standard for Multimedia Streaming Over the Internet

Note: Use MPEG-DASH to stream to a CDN that does not support RTMP when low latency isn’t a requirement. It’s important to note that RTMP is already being deprecated by more and more CDNs. DASH is also well suited to securely stream corporate training and town halls over private LANs when low latency isn’t a requirement and network conditions are poor.

● Multiple audio tracks for one video track for multilingual productions.

● Inclusion of metadata and other types of embedded content.

● Digital rights management (DRM) support.

● Send multiple versions of the stream at different resolutions and bitrates so that viewers can select the quality that suits their network conditions or screen size.

● Scalability is much easier and cheaper for HLS and MPEG-DASH than for RTMP. And RTMP usually requires IT network ports to be manually opened in order to traverse firewalls.

Note: If latency or poor network conditions aren’t an issue, then HLS or MPEG-DASH beats out SRT protocol. Adaptive HTTP-based streaming protocols deliver the best possible video quality to viewers with different network conditions and are more straightforward to set up than SRT protocol.

14. True Things about the SRT Protocol

1. SRT protocol is an open-source solution that has been integrated into multiple platforms and architectures, including hardware-based portable solutions and software-based cloud solutions.

2. SRT protocol works well on connections with delays ranging from a few milliseconds to a few seconds. It can handle long network delays.

3. SRT protocol is payload agnostic. Any type of video or audio media, or indeed any other data element that can be sent using UDP, is compatible with SRT. It supports multiple stream types.

4. SRT protocol supports sending multiple concurrent streams. Multiple different media streams such as multiple camera angles or optional audio tracks can be sent via parallel SRT streams sharing the same UDP port and address on a point-to-point link.

5. The handshake process used by SRT protocol supports outbound connections without the need to open dangerous permanent external ports in the firewall, thereby maintaining the company's security policy. Enhanced firewall traversal.

6. The SRT protocol endpoint establishes a stable end-to-end delay profile, eliminating the need for downstream equipment to have its own buffer to deal with changing signal delays. The signal time is accurate.

CDNs like Akamai have already announced they’re ending support for RTMP. It’s old and expensive to deploy. With new protocols like SRT protocol, HLS, and MPEG-DASH gaining popularity, it’s only a matter of time before RTMP will be a thing of the past.

Note: If low latency is needed and you are streaming over unpredictable networks, then SRT protocol is the streaming protocol of choice. SRT protocol establishes its own connection for packet recovery that is way more efficient than TCP. That enables SRT protocol to deliver near real-time, two-way communications between a host and a remote guest. And you can tune the latency to adjust for network conditions.
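As a rough sketch of what tuning the latency can look like, the helper below derives a latency setting from a measured round-trip time (RTT). It assumes a rule of thumb in which latency is set to a multiple of the RTT so that a lost packet can be requested and retransmitted before it is due for playback. The multiplier of 4 and the function name are assumptions for illustration; the 120 ms floor matches the default latency in the open-source SRT library.

```python
DEFAULT_SRT_LATENCY_MS = 120  # default latency setting in the SRT library


def suggest_latency_ms(rtt_ms: float, rtt_multiplier: float = 4.0) -> int:
    """Suggest an SRT latency value from a measured RTT (hypothetical helper).

    The multiplier is an assumed starting point; lossier links generally
    need a larger value so that more retransmission attempts fit in the buffer.
    """
    if rtt_ms <= 0 or rtt_multiplier <= 0:
        raise ValueError("rtt_ms and rtt_multiplier must be positive")
    return max(DEFAULT_SRT_LATENCY_MS, round(rtt_ms * rtt_multiplier))


if __name__ == "__main__":
    for rtt in (10, 50, 150):
        print(f"RTT {rtt} ms -> latency {suggest_latency_ms(rtt)} ms")
```

A value like this would then be passed to the sender and receiver configuration, for example through a `latency` option on an `srt://` URI where the tool in use supports it.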
